[Recap] - Uber - Better Load Balancing: Real-Time Dynamic Subsetting

How Uber improved load balancing with real-time dynamic subsetting for millions of containers.

Overview

Subsetting is a common technique used in load balancing for large-scale distributed systems.

This blog post discusses Uber's service mesh architecture, which has powered thousands of critical microservices since 2016. It highlights the challenges encountered while scaling the number of tasks in the mesh and the issues with the initial subsetting approach.

The post concludes with the development of a real-time dynamic subsetting solution and its successful implementation in production.

Uber Service Mesh

What’s a Service Mesh?

A service mesh is the infrastructure layer that allows microservices to communicate with each other via remote procedure calls (RPC) without worrying about infrastructure details.

The service mesh handles discovery, load balancing, authentication, traffic shaping, observability, and reliability.

Uber Service Mesh Architecture

At a very high level, Uber’s service mesh architecture is centered around an on-host L7 reverse proxy:

The caller service sends the RPC to a local port that the host proxy is listening on.

The host proxy forwards the RPC directly to a backend task of the callee service.

On the other side, the proxy connects to control plane components to decide how to send the traffic.

Load Balancing At Play

Load Balancing Goals

The goal of load balancing is to make sure that each backend task of a service consumes a similar percentage of available resources. In our context, that resource is typically CPU time.

We measure the effectiveness of load balancing via CPU load imbalance. We define the CPU load imbalance as the ratio of p99/average CPU utilization of tasks for a given service.
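As a rough illustration of this metric, the sketch below computes the p99/average ratio over a list of per-task CPU utilizations. The function names and the nearest-rank percentile method are assumptions made for the example; the source does not say how the percentile is computed.

```python
import math


def percentile_nearest_rank(values, pct):
    """Return the pct-th percentile using the nearest-rank method (an assumption)."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def cpu_load_imbalance(utilizations):
    """Ratio of p99 to average CPU utilization across the tasks of one service."""
    average = sum(utilizations) / len(utilizations)
    if average == 0:
        raise ValueError("average utilization is zero; the ratio is undefined")
    return percentile_nearest_rank(utilizations, 99) / average


# Nine tasks at 50% CPU and one hot task at 100% CPU.
print(cpu_load_imbalance([0.5] * 9 + [1.0]))
```

A value of 1.0 means every task is equally loaded; the further the ratio rises above 1.0, the hotter the busiest tasks are relative to the average.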

Load Balancing Concepts

What is Subsetting?

Subsetting in the context of load balancing means dividing the set of potential backend tasks into overlapping “subsets,” so that while all backends receive traffic in aggregate, each proxy performs the load balancing on a limited set of tasks.

Legacy Subsetting

When Uber started, a static default subsetting size was used. Each microservice owner could decide to use an override if needed. This worked well initially, but presented a number of challenges over time.

Problems with Legacy Subsetting

Imbalance Due to Random Task Selection

Example:

Service A calling Service B with combined 120 QPS

The static subsetting size is 3 while there are 5 backend tasks for B

An instance of B receives higher traffic.
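The example above can be reproduced with a small simulation. This is only a sketch: the number of caller proxies (4, each sending 30 QPS) and the even split within a subset are assumptions, since the example only states the combined 120 QPS, the subset size, and the backend count.

```python
import random


def simulate_static_subsetting(num_proxies, qps_per_proxy, num_backends,
                               subset_size, seed=0):
    """Each proxy picks a random subset and splits its traffic evenly across it."""
    rng = random.Random(seed)
    backend_qps = {backend: 0.0 for backend in range(num_backends)}
    for _ in range(num_proxies):
        # Random, uncoordinated subset selection, as in the legacy approach.
        subset = rng.sample(range(num_backends), subset_size)
        for backend in subset:
            backend_qps[backend] += qps_per_proxy / subset_size
    return backend_qps


# Hypothetical setup: 4 proxies x 30 QPS = 120 QPS, subset size 3, 5 backends.
loads = simulate_static_subsetting(num_proxies=4, qps_per_proxy=30.0,
                                   num_backends=5, subset_size=3)
for backend, qps in sorted(loads.items()):
    print(f"backend {backend}: {qps:.0f} QPS")
```

With these numbers, the 12 subset slots cannot be spread evenly over 5 backends, so at least one backend always receives more traffic than another, whatever the random seed.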

The diagrams provided are simplified: proxies don't evenly distribute traffic to all backends. Initially, "least-pending request" load balancing was used, which partly offset the imbalance caused by subset selection. However, it wasn't effective enough, because each proxy tracked its own requests to each backend independently, and the only information shared between proxies was implicit, through response latency.

This helped slightly, since overloaded backends responded more slowly to all proxies, but it had no significant effect on load balancing because container utilization was low. Moreover, effective load balancing was needed before overload became visible, to prevent user impact.
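To make the limitation concrete, here is a minimal sketch of a least-pending-request picker as a single proxy might run it. The class and method names are hypothetical, and the tie-breaking rule (first backend in the subset wins) is an assumption.

```python
class LeastPendingPicker:
    """Tracks in-flight requests per backend, as seen by ONE proxy only."""

    def __init__(self, subset):
        self.pending = {backend: 0 for backend in subset}

    def acquire(self):
        """Pick the backend with the fewest in-flight requests from this proxy."""
        backend = min(self.pending, key=self.pending.get)
        self.pending[backend] += 1
        return backend

    def release(self, backend):
        """Call when the response for a request to `backend` has arrived."""
        self.pending[backend] -= 1


picker = LeastPendingPicker(["b1", "b2", "b3"])
first = picker.acquire()
second = picker.acquire()
picker.release(first)
print(first, second, picker.acquire())
```

The picker only sees its own in-flight counts. A backend that is busy serving other proxies looks idle here until its responses slow down, which is the implicit latency signal described above.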

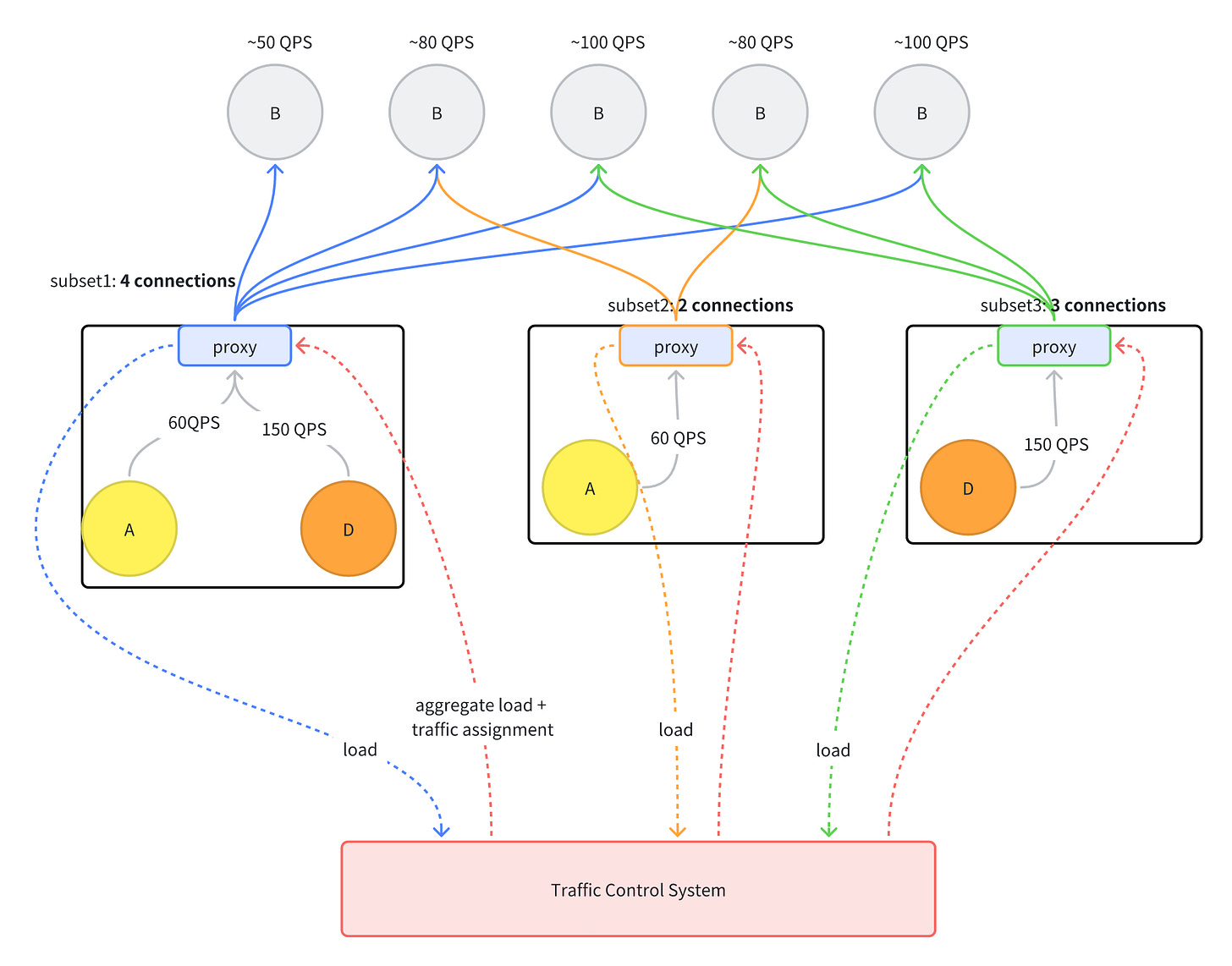

Imbalance Due to Host Co-location

Service A & D co-located on the same host.

Both A & D services calling B

As the service mesh grew, the imbalance issues happened more often.

Improvements with Real-Time Dynamic Subsetting

Architecture

Aggregation Control Plane

On-Host Proxy

At this point, the on-host proxy has 3 relevant pieces of information:

Traffic Assignment: the percentage of traffic sent to each pool

This is passed down from the control plane

Load: the amount of traffic it’s sending to a target service

This is tracked independently by each proxy, with some post-processing to stabilize spiky traffic over time

Aggregate Load (new): the overall traffic a target service is receiving

This is passed down to the proxy from the control plane

The new data enables us to adjust the subset size per proxy in near real time. The desired per-pool subset size is recalculated using the formula:

desiredSubsetSize = numberOfTaskInPool * load/(aggregateLoad*assignment) * constant

This implies that proxies generating higher traffic connect to more backends, distributing their load over a larger task subset than less active proxies. This improves load balancing and maintains the subsetting objective (limiting total mesh connections). By tuning the constant, we can control the number of outbound connections and thus the quality of load balancing.
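A worked example may help. The sketch below evaluates the formula for two hypothetical proxies calling the same pool. It assumes that `assignment` is expressed as a fraction between 0 and 1 and that the result is rounded up to a whole number of tasks; neither detail is stated in the source, and all input values are made up.

```python
import math


def desired_subset_size(number_of_tasks_in_pool, load, aggregate_load,
                        assignment, constant):
    """desiredSubsetSize = numberOfTaskInPool * load/(aggregateLoad*assignment) * constant"""
    pool_load = aggregate_load * assignment  # share of the service's traffic sent to this pool
    if pool_load <= 0:
        return 0  # assumption: no traffic assigned to the pool means no subset
    raw = number_of_tasks_in_pool * load / pool_load * constant
    return math.ceil(raw)  # assumption: round up to whole tasks


# Pool of 100 tasks receiving 1000 RPS in aggregate, with constant = 2.
quiet_proxy = desired_subset_size(100, load=50, aggregate_load=1000,
                                  assignment=1.0, constant=2.0)
busy_proxy = desired_subset_size(100, load=200, aggregate_load=1000,
                                 assignment=1.0, constant=2.0)
print(quiet_proxy, busy_proxy)
```

The proxy sending four times the traffic gets a subset four times as large, so each backend connection carries roughly the same load regardless of which proxy opened it.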

Although the math is simple, implementing it required careful performance work. The proxy, unaware of future schedules or destination services, must constantly monitor the subset sizes of many pools. Fortunately, most don't change often, and a typical proxy interacts with only hundreds of pools.

Task selection remains random, with no coordination between proxies, so load imbalance is improved but not eliminated. Subset size adjustments are also delayed by a few seconds, because load reports must propagate between the proxies and the control plane.

Rollout and Results

Rollout

The six-month rollout began with internal testing, moved to early adopters, then batch onboarding, and finally, power services with manual settings. The last group was more critical and took more time, requiring individual work with service owners to avoid degradations.

Problems:

The first rollout change was increasing the "minimum subset" size to mitigate issues with heterogeneous callers (a caller with very low RPS that’s disproportionately CPU-heavy) and "slow start".

A few cautious services customized their aperture constant during onboarding. We found that some service owners prefer to run with their own custom, manually managed settings, especially during the initial onboarding.

The final issue was the non-trivial cost of maintaining TCP connections in the proxy. A combination of multiple high-RPS instances calling multiple large backend pools (tens of thousands of tasks) on a single host would cause thousands of open connections. Due to a Go HTTP/2 issue, this caused high memory usage in the proxy. The solution was to cap the maximum number of connections.
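Two of the fixes above, the raised "minimum subset" size and the connection cap, amount to clamping the computed subset size. The sketch below shows one way to express that. The function name, the parameter names, and the numeric limits are hypothetical and not taken from the source.

```python
def clamp_subset_size(desired, pool_size, min_subset, max_connections):
    """Bound a computed subset size by a floor, a connection cap, and the pool size."""
    upper = min(pool_size, max_connections)  # cannot connect to more tasks than exist
    lower = min(min_subset, upper)           # the floor must not exceed the ceiling
    return max(lower, min(desired, upper))


# Hypothetical limits: at least 5 backends, at most 1000 connections per pool.
print(clamp_subset_size(1, pool_size=50_000, min_subset=5, max_connections=1000))
print(clamp_subset_size(20_000, pool_size=50_000, min_subset=5, max_connections=1000))
```

The floor protects low-RPS but CPU-heavy callers from concentrating on too few backends, while the cap bounds the proxy's memory use when high-RPS callers target very large pools.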

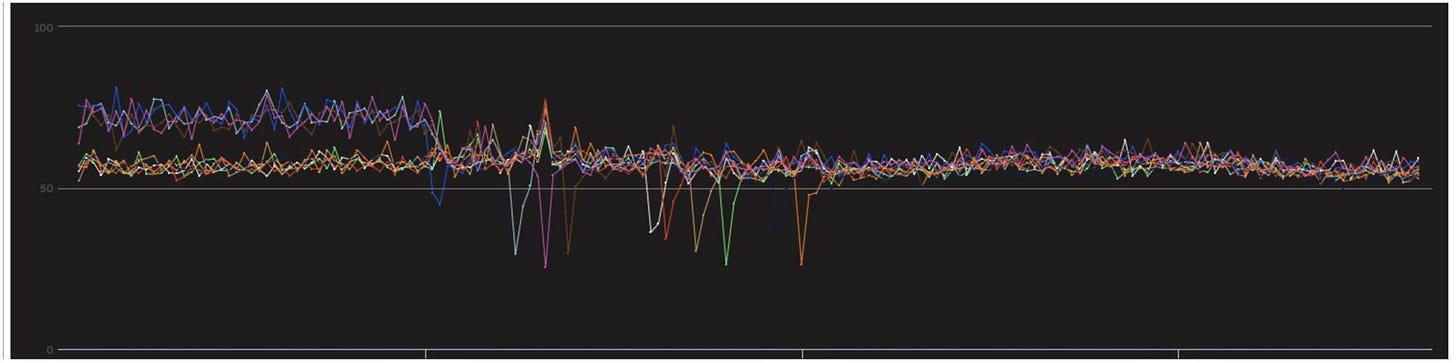

Results

The main success was reduced maintenance cost, with no complaints about subsetting since the rollout. This alone justified the project as it significantly reduced toil.

The project also significantly improved load balancing, which resulted in sizable efficiency wins.

Potential Improvements

Improvements could include reducing connection churn by eagerly increasing and lazily reducing the subset, and addressing the issue of low-RPS callers not reaching all backends in large pools by using pool size ratios in subset selection.

We could also achieve higher precision by replacing the random peer selection with deterministic subsetting, but this would introduce more complexity, as cross-proxy coordination would be required.
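The "eagerly increase, lazily reduce" idea could look like the following sketch. The source does not describe a concrete policy. The rule used here, shrinking only after the desired size has stayed below the current size for a fixed number of consecutive recalculations, is an assumption chosen for illustration, and the class name is hypothetical.

```python
class LazyShrinkSubset:
    """Grow the subset immediately; shrink only after a sustained drop."""

    def __init__(self, initial_size, shrink_after):
        self.size = initial_size
        self.shrink_after = shrink_after  # consecutive low readings required to shrink
        self._below_count = 0

    def update(self, desired):
        """Feed one recalculated desired size; return the size actually used."""
        if desired >= self.size:
            # Eager increase: open connections as soon as more are needed.
            self.size = desired
            self._below_count = 0
        else:
            # Lazy decrease: wait until the drop has persisted.
            self._below_count += 1
            if self._below_count >= self.shrink_after:
                self.size = desired
                self._below_count = 0
        return self.size


subset = LazyShrinkSubset(initial_size=5, shrink_after=3)
print([subset.update(d) for d in [8, 4, 4, 4, 3, 6]])
```

A short dip in traffic then leaves existing connections open, so they do not have to be re-established when the load returns.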

Our current implementation aggregates load without distinction. Although tracking load by callers or procedures could improve precision, it would add significant complexity. Accounting for request heterogeneity would require deep request inspection, which is likely too expensive.